This project is a fork of usef-kh/fer. We built an application that detects emotions in user-provided images of human faces, and we used code from atulapra/Emotion-detection to implement a real-time camera emotion detector.

Github: https://github.com/blueskyson/emotion-recognition

It is the final project for CSIE7606 - Computer Vision and Deep Learning, taught by Jenn-Jier (James) Lien at National Cheng Kung University. The course gives students an in-depth understanding of the theory behind computer vision, machine learning, and deep learning, analyzes how the principles of deep learning combine with the development of artificial intelligence and computer vision, and also focuses on technical practice. These topics are explained through the instructor’s industry-academic collaboration experience, including facial expression analysis, cloud-based intelligent monitoring services, automated optical inspection, intelligent robotic arm control, and autonomous vehicles.

1. Introduction

Facial emotion recognition plays an important role in human-computer interactions and can be applied to digital advertisement, gaming, and customer feedback assessment.

One emotion recognition dataset that captures difficult naturalistic conditions and challenges is FER2013. Human performance on this dataset is estimated to be 65±5% [2], which means that recognizing these emotions is hard even for humans. The paper we reproduce [1] adopts a VGG network and runs various experiments to explore different optimization algorithms and learning rate schedulers. The authors thoroughly tune the model and hyperparameters to achieve state-of-the-art results with a testing accuracy of 73.28%.

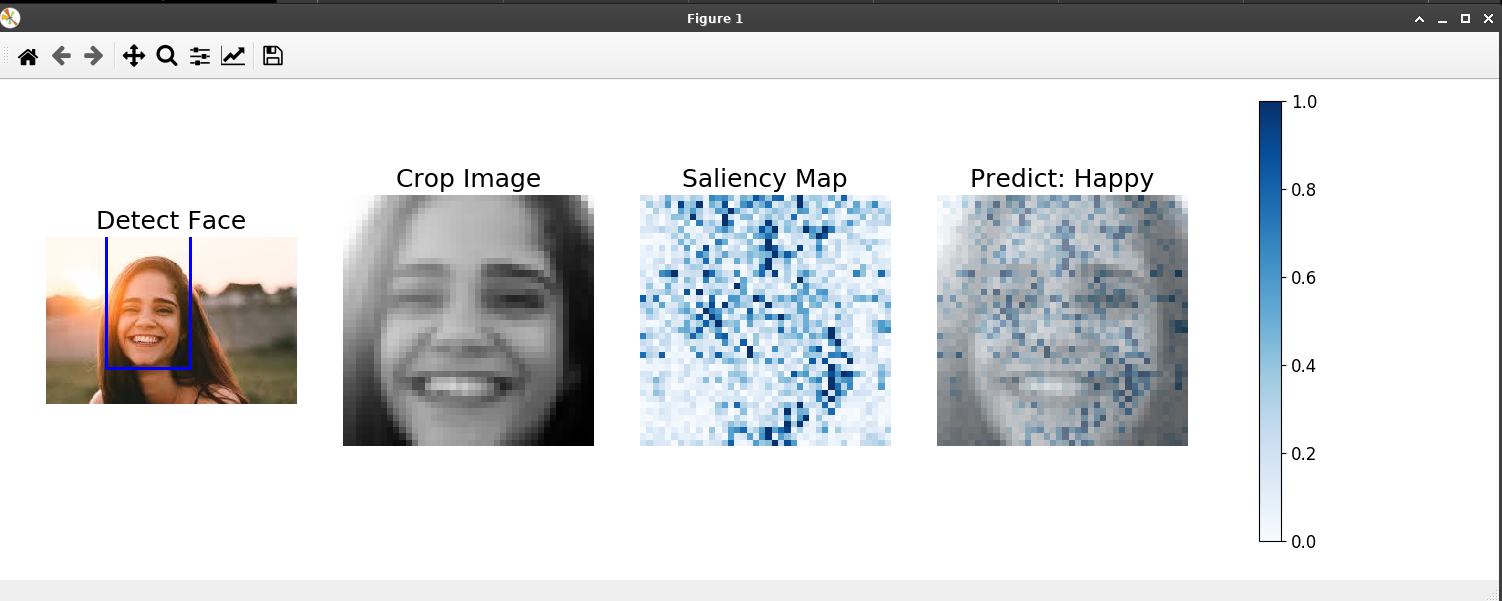

We have reproduced the paper’s work. Furthermore, we use a Haar-based face detector to build an application that detects emotions in user-provided images, and we integrate our model into a real-time camera emotion detector.

2. System Architecture

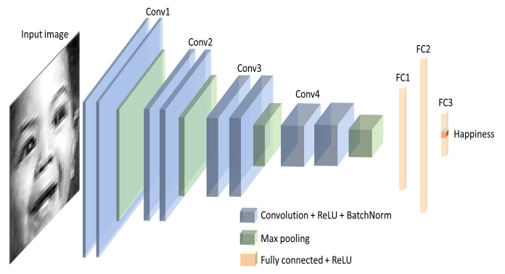

VGGNet is a classical convolutional neural network architecture used in pattern recognition. The network consists of 4 convolutional stages and 3 fully connected layers.

Each convolutional stage contains two convolution blocks and a max-pooling layer. Each convolution block contains a convolutional layer, a ReLU activation, and a batch normalization layer. Batch normalization is used to speed up the learning process, reduce internal covariate shift, and prevent vanishing or exploding gradients.

The first two fully connected layers are followed by a ReLU activation. The third fully connected layer is for classification.

Overall, convolutional stages are responsible for feature extraction, and fully connected layers are trained to classify the images.

- Conv1 to Conv4 are convolution stages, which are used for feature extraction.

- A blue rectangle represents a convolution block.

- A green rectangle represents a max-pooling layer.

- FC1 to FC3 are fully connected layers, which are used to classify emotions from features extracted by former stages.
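The architecture described above could be written in PyTorch roughly like this. It is a sketch under stated assumptions: the channel widths (64, 128, 256, 512), the 3x3 kernels, the 2x2 pooling, and the fully connected width of 4096 are not given in the text, and the class name `VggSketch` is hypothetical.

```python
import torch
from torch import nn


def conv_block(in_ch, out_ch):
    # Convolution -> ReLU -> batch normalization, in the order the text lists them.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.BatchNorm2d(out_ch),
    )


def conv_stage(in_ch, out_ch):
    # Two convolution blocks followed by a 2x2 max-pooling layer.
    return nn.Sequential(
        conv_block(in_ch, out_ch),
        conv_block(out_ch, out_ch),
        nn.MaxPool2d(kernel_size=2),
    )


class VggSketch(nn.Module):
    def __init__(self, num_classes=7, widths=(64, 128, 256, 512), fc_width=4096):
        super().__init__()
        channels = (1,) + tuple(widths)  # 1 input channel: grayscale images
        self.features = nn.Sequential(
            *[conv_stage(channels[i], channels[i + 1]) for i in range(4)]
        )
        # A 48x48 input is halved by each of the four stages: 48 -> 24 -> 12 -> 6 -> 3.
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(widths[-1] * 3 * 3, fc_width),
            nn.ReLU(),
            nn.Linear(fc_width, fc_width),
            nn.ReLU(),
            nn.Linear(fc_width, num_classes),  # FC3: one logit per emotion
        )

    def forward(self, x):
        return self.classifier(self.features(x))


model = VggSketch().eval()
with torch.no_grad():
    logits = model(torch.zeros(2, 1, 48, 48))  # batch of two blank images
```

The output has one row per image and one column per emotion category; applying a softmax over the columns would turn the logits into class probabilities.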

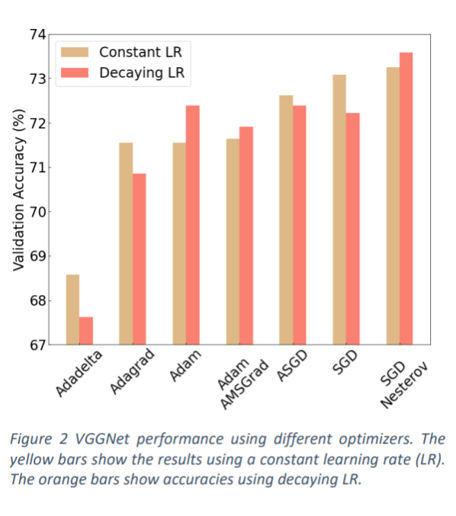

Optimizers

The authors test seven optimizers: SGD, SGD with Nesterov Momentum, Average SGD, Adam, Adam with AMSGrad, Adadelta, and Adagrad. This experiment is done under two configurations:

- Constant LR: a fixed learning rate of 0.001.

- Decaying LR: an initial learning rate of 0.01, decayed by a factor of 0.75 if the validation accuracy plateaus for 5 epochs (RLRP scheduling).

They find that SGD with Nesterov momentum performs best.
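The decaying-LR configuration with the winning optimizer might be set up in PyTorch as sketched below. The momentum value of 0.9 and the placeholder model are assumptions, since the text does not state them; the learning rate, decay factor, and patience come from the description above.

```python
import torch
from torch import nn

# Placeholder model; any nn.Module would do for this sketch.
model = nn.Linear(4, 7)

optimizer = torch.optim.SGD(
    model.parameters(),
    lr=0.01,        # initial learning rate of the decaying-LR configuration
    momentum=0.9,   # assumption: the text does not state the momentum value
    nesterov=True,
)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer,
    mode="max",     # the monitored quantity is validation accuracy
    factor=0.75,
    patience=5,
)

# Feed a validation accuracy that never improves and record the learning rate.
lrs = []
for epoch in range(8):
    optimizer.step()       # stands in for one epoch of training
    scheduler.step(0.5)    # constant accuracy, i.e. a plateau
    lrs.append(optimizer.param_groups[0]["lr"])
```

In a real training loop, `scheduler.step` would receive the validation accuracy measured at the end of each epoch.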

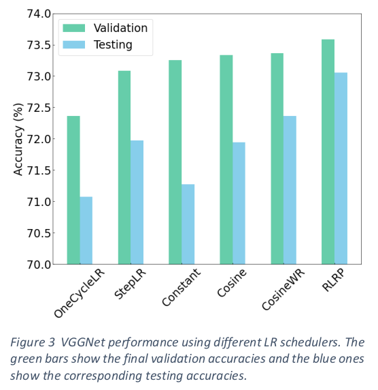

Learning Rate (LR) Schedule

The authors test 5 different LR schedulers, including Reduce Learning Rate on Plateau (RLRP), Cosine Annealing (Cosine), Cosine Annealing with Warm Restarts (CosineWR), One Cycle Learning Rate (OneCycleLR), and Step Learning Rate (StepLR).

All schedulers are tested with the SGD with Nesterov momentum optimizer.

They find that RLRP performs best, since it monitors the current performance before deciding when to drop the learning rate.
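To make the "monitor first, then drop" behavior concrete, here is a plain-Python sketch of the decision logic. `PlateauReducer` is a hypothetical helper written for illustration, not the scheduler implementation the project uses; the factor and patience follow the RLRP settings mentioned earlier.

```python
class PlateauReducer:
    """Hypothetical helper that mimics reduce-on-plateau scheduling."""

    def __init__(self, lr, factor=0.75, patience=5):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, val_accuracy):
        if val_accuracy > self.best:
            # Still improving: remember the new best and keep the learning rate.
            self.best = val_accuracy
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                # No improvement for more than `patience` epochs: drop the rate.
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


reducer = PlateauReducer(lr=0.01)
improving = [reducer.step(acc) for acc in (0.40, 0.50, 0.60, 0.65)]
stalled = [reducer.step(0.65) for _ in range(6)]
```

While the accuracy keeps rising, the learning rate stays put; it only drops once the accuracy has stalled for longer than the patience window. Time-based schedulers such as StepLR or Cosine Annealing change the rate regardless of how the model is doing.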

3. Data Collection

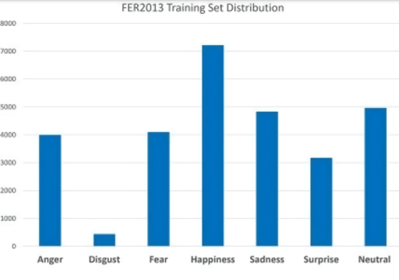

FER2013 is a dataset composed of 35887 grey-scale 48x48 images of faces classified into 7 categories: anger, disgust, fear, happiness, sadness, surprise, and neutral.

The dataset is divided into a training set (28709 images), a public test set (3589 images), and a private test set (3589 images), which is usually considered the test set for final evaluations.
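Reading the data could look roughly like this. The sketch assumes the commonly distributed single-CSV layout with `emotion`, `pixels`, and `Usage` columns, where `pixels` holds 2304 space-separated grey values; the function name `read_fer_rows` is hypothetical, and the sample rows are synthetic.

```python
import csv
import io
from collections import Counter


def read_fer_rows(csv_file):
    """Yield (label, 48x48 pixel rows, usage) from a FER2013-style CSV."""
    for row in csv.DictReader(csv_file):
        values = [int(v) for v in row["pixels"].split()]
        if len(values) != 48 * 48:
            raise ValueError("expected 2304 pixel values per image")
        image = [values[r * 48:(r + 1) * 48] for r in range(48)]
        yield int(row["emotion"]), image, row["Usage"]


# Tiny synthetic stand-in for the real file: two all-black images.
pixels = " ".join(["0"] * (48 * 48))
sample = io.StringIO(
    "emotion,pixels,Usage\n"
    f"3,{pixels},Training\n"
    f"6,{pixels},PublicTest\n"
)
rows = list(read_fer_rows(sample))
split_sizes = Counter(usage for _, _, usage in rows)
```

On the real file, `split_sizes` would give the number of images per split, which is a quick way to check the split sizes quoted above.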

FER2013 is known as a challenging dataset because of its noisy data, with a relatively large number of non-face images and misclassifications. It is also strongly unbalanced, with only 436 samples in the least populated category, “Disgust”, and 7215 samples in the most populated category, “Happiness”.

This makes the dataset analogous to real-world challenges in the field.
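The size of the imbalance follows directly from the two counts quoted above. The snippet below is simple arithmetic; it assumes both counts refer to the 28709-image training set, which the text does not state explicitly.

```python
# Per-class sample counts quoted in the text (the other classes are omitted).
counts = {"disgust": 436, "happiness": 7215}

# How many times larger the biggest class is than the smallest one.
imbalance_ratio = max(counts.values()) / min(counts.values())

# Assumption: both counts belong to the training set of 28709 images.
training_size = 28709
shares = {name: n / training_size for name, n in counts.items()}
```

Under that assumption, the happiness class is roughly 16.5 times larger than the disgust class, which makes up only about 1.5% of the training images.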

4. Our Experimental Results

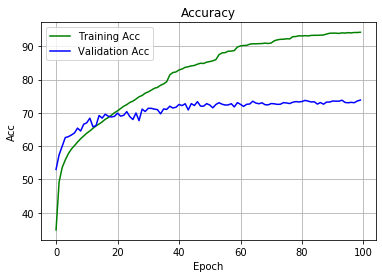

Accuracy

In our experiment, the model makes large gains from epoch 0 to 30 and continues to improve from epoch 31 to 100 thanks to the RLRP learning rate scheduler.

Training terminates at the 100th epoch with a testing accuracy of 71.52415%, which is close to the 73.06% reported in the original paper that our experiment is based on.

The paper reports its result as the state of the art on FER2013 without extra training data.

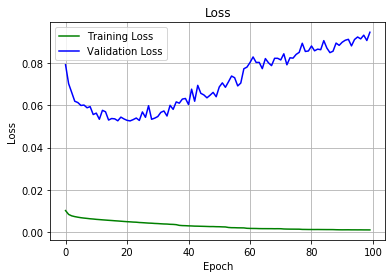

Loss

During the training phase, the training loss was never equal to the validation loss, which indicates that there is no underfitting.

Nor was the training loss much lower than the validation loss, which indicates that no overfitting occurred during the process.

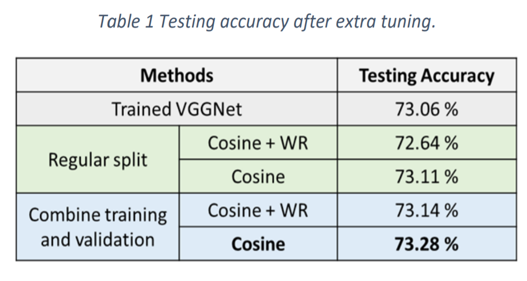

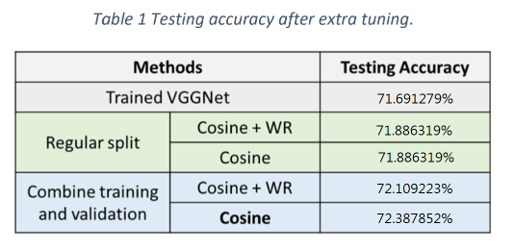

The Paper’s Original Fine Tuning Result

The authors tune their best model (73.06% accuracy) for 50 extra epochs using both cosine annealing schedulers with lr=0.0001. Cosine Annealing performs best here and improves the model by 0.05%. Cosine Annealing with Warm Restarts negatively impacts performance in this tuning stage, reducing the accuracy by 0.42%. Both models perform better after training on the combined training and validation data.

The paper’s final best model achieves an accuracy of 73.28%.
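The two cosine schedules used in this fine-tuning stage follow the standard cosine annealing curve, sketched here in plain Python. The minimum learning rate of 0 and the restart period of 10 epochs are assumptions chosen for illustration; only the starting rate of 0.0001 and the 50 epochs come from the text.

```python
import math


def cosine_lr(epoch, total_epochs, lr_max=1e-4, lr_min=0.0):
    """Cosine annealing: decay from lr_max to lr_min over total_epochs."""
    cos_term = math.cos(math.pi * epoch / total_epochs)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cos_term)


def cosine_wr_lr(epoch, period, lr_max=1e-4, lr_min=0.0):
    """Warm restarts: the same curve, restarted every `period` epochs."""
    return cosine_lr(epoch % period, period, lr_max, lr_min)


# 50 fine-tuning epochs starting from lr = 0.0001, as described above.
plain = [cosine_lr(e, 50) for e in range(51)]
# Assumption: a fixed restart period of 10 epochs, chosen for illustration.
restarts = [cosine_wr_lr(e, 10) for e in range(50)]
```

The plain schedule decays smoothly to the minimum by the last epoch, while the warm-restart variant jumps back to the starting rate at the beginning of every period.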

Our Fine Tuning Result

We tune our best model (71.6912% accuracy) for 20 extra epochs using both cosine annealing schedulers with lr=0.0001.

Both models perform better after training on the combined dataset resulting from the training and validation data.

Our final best model achieves an accuracy of 72.3878%.

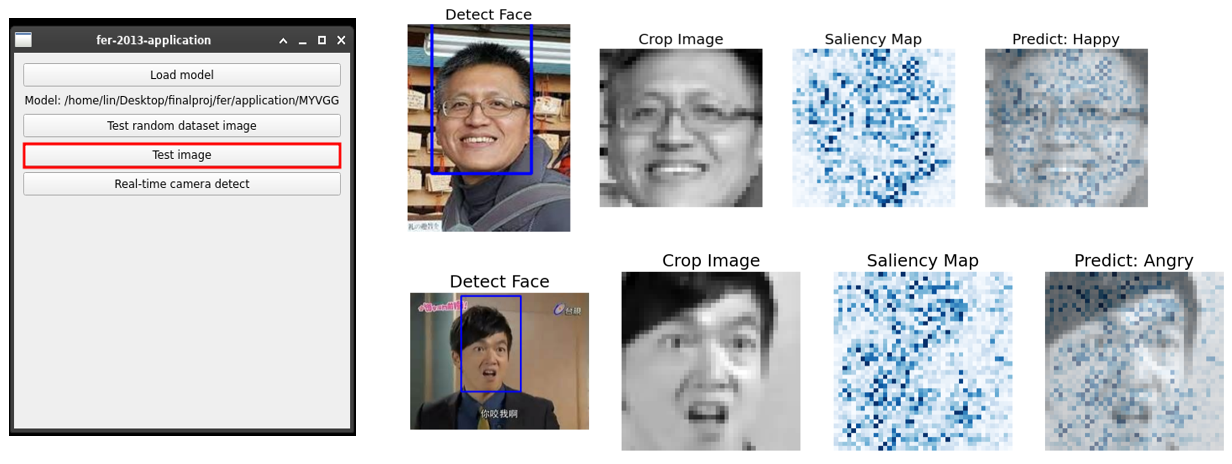

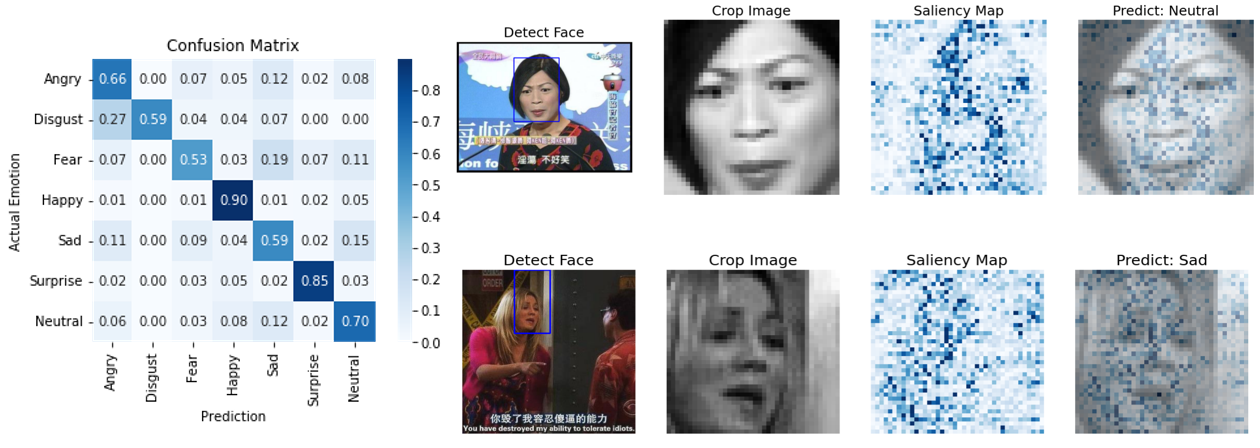

5. Demo

Correct Recognitions:

Incorrect Recognitions:

Real-time Camera Detector:

6. Conclusion and Future Works

The paper achieves single-network state-of-the-art classification accuracy on FER2013 using a VGGNet. The authors thoroughly tune all hyperparameters towards an optimized model for facial emotion recognition. They explore different optimizers and learning rate schedulers, and the best initial testing classification accuracy achieved is 73.06%, surpassing all single-network accuracies previously reported. They also carry out extra tuning on their model using Cosine Annealing and combine the training and validation datasets to further improve the classification accuracy to 73.28%.

For future work, we plan to explore different image preprocessing techniques on FER2013 and investigate ensembles of different deep learning architectures to further improve our performance in facial emotion recognition.

We obtained our best model by training for 100 epochs and reproduced the fine-tuning experiments with 20 extra epochs. The results show that Cosine Annealing on the combined training and validation data performs best, which is consistent with the paper.

7. Additional Work

We also use ResNet34V2, published by Microsoft Research [4]. In contrast to the original ResNet34, ResNet34V2 puts the batch normalization layer and ReLU activation before the convolution layer.
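The pre-activation ordering can be illustrated with a single residual block. This is a minimal sketch with a hypothetical class name `PreActBlock` and an arbitrary channel count; it keeps the channel count and resolution fixed and is not the full ResNet34V2 used in our experiment.

```python
import torch
from torch import nn


class PreActBlock(nn.Module):
    """Pre-activation residual block: BN -> ReLU -> conv, twice, plus a skip."""

    def __init__(self, channels):
        super().__init__()
        self.bn1 = nn.BatchNorm2d(channels)
        self.conv1 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(channels)
        self.conv2 = nn.Conv2d(channels, channels, 3, padding=1, bias=False)

    def forward(self, x):
        # Normalization and activation come before each convolution.
        out = self.conv1(torch.relu(self.bn1(x)))
        out = self.conv2(torch.relu(self.bn2(out)))
        return x + out  # identity shortcut; nothing is applied after the sum


block = PreActBlock(16).eval()
with torch.no_grad():
    y = block(torch.zeros(1, 16, 12, 12))
```

Because nothing follows the addition, the shortcut path carries the input through the block unchanged, which is the identity mapping that reference [4] argues for.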

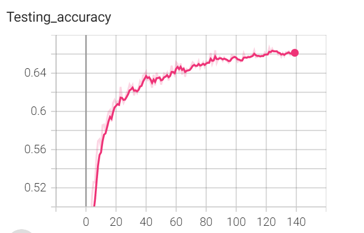

In our experiment, the model makes large gains from epoch 0 to 20 and continues to improve from epoch 31 to 100 thanks to the RLRP learning rate scheduler.

Training terminates at the 140th epoch with a testing accuracy of 66%. This is lower than the accuracy of VGGNet, but slightly above the estimated human performance (65±5%).

Comparison

| | Our VGGNet | Paper’s VGGNet | ResNet34V2 | SVM |
|---|---|---|---|---|
| Testing accuracy | 72.387852% | 73.28% | 66% | 31% |
| Epochs | 120 | 350 | 140 | X |
| Fine-tuning | final 20 epochs with combined training and validation dataset | final 50 epochs with combined training and validation dataset | X | X |

8. Reference Sources

- [1] Yousif Khaireddin, Zhuofa Chen, “Facial Emotion Recognition: State of the Art Performance on FER2013”, arXiv:2105.03588

- [2] Roberto Pecoraro, Valerio Basile, Viviana Bono, Sara Gallo, “Local Multi-Head Channel Self-Attention for Facial Expression Recognition”, arXiv:2111.07224

- [3] Shivam Gupta, “Facial emotion recognition in real-time and static images”, DOI: 10.1109/ICISC.2018.8398861

- [4] Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, “Identity Mappings in Deep Residual Networks”, arXiv:1603.05027

Referenced Repositories

- Code for the paper “Facial Emotion Recognition: State of the Art Performance on FER2013”, https://github.com/usef-kh/fer

- Real-time Facial Emotion Detection using deep learning, https://github.com/atulapra/Emotion-detection

- ResNet for pytorch, https://github.com/pytorch/vision/blob/main/torchvision/models/resnet.py
